Google —

Challenging marketers to break through data bias.

ML Fairness / Interactive Microsite

the ask

Demystify data bias to show the importance of DE&I in tech.

As part of an effort to understand some of the key DE&I issues in tech, Google uncovered important findings about algorithmic bias in data, specifically its effects on marketing. They needed a way to clearly introduce marketers to this problem and engage them in a way that would resonate.


the insight

Marketers aren’t thinking about data’s role in diversity.

Now more than ever, modern marketers are increasing their focus on DE&I. That focus often shows up where it's most visible: representation, casting, direction, and photography. While those are all vital, most marketers miss a bigger issue that is much harder to see: how bias in their data affects diversity.

the solution

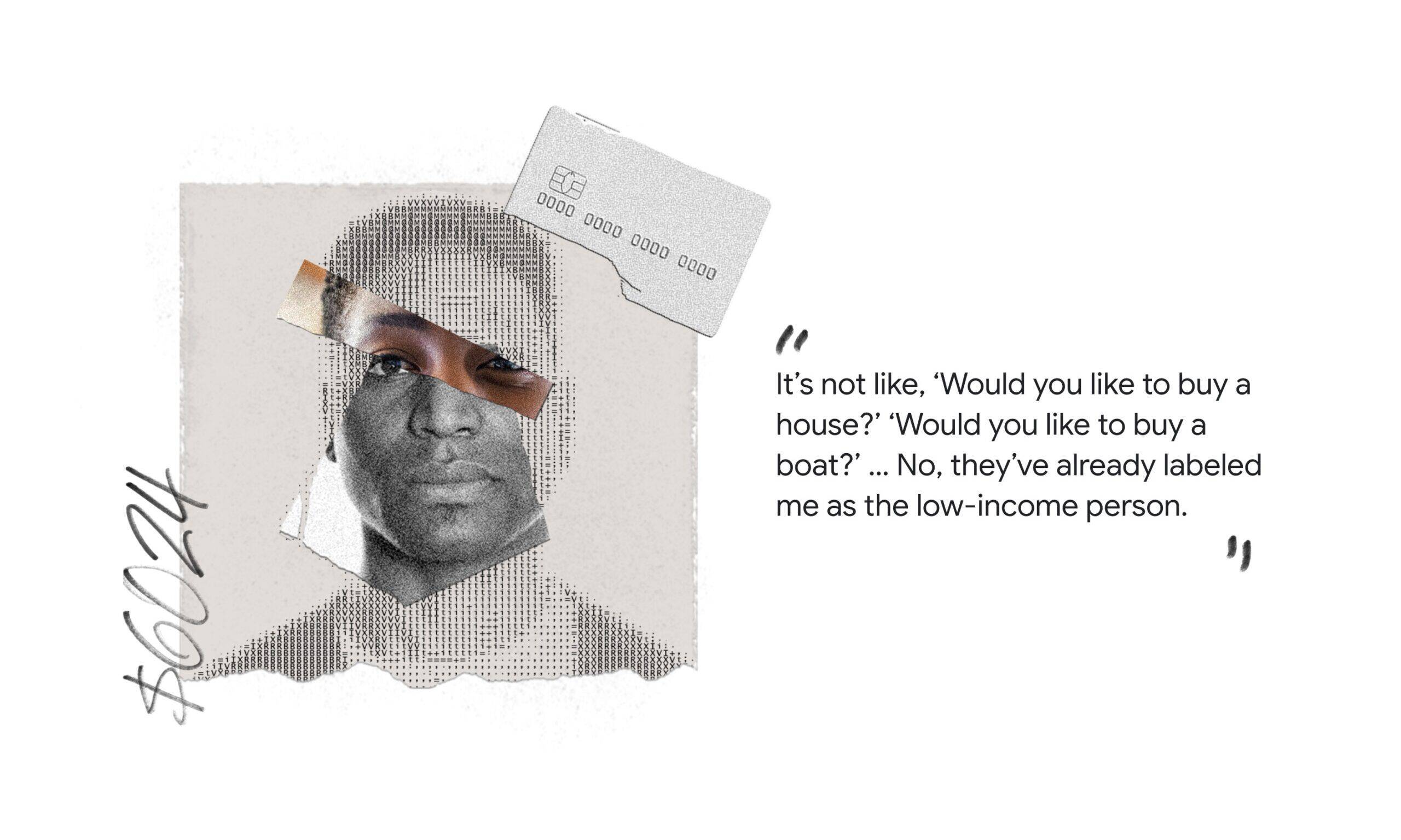

AI-generated portraits that put bias on display.

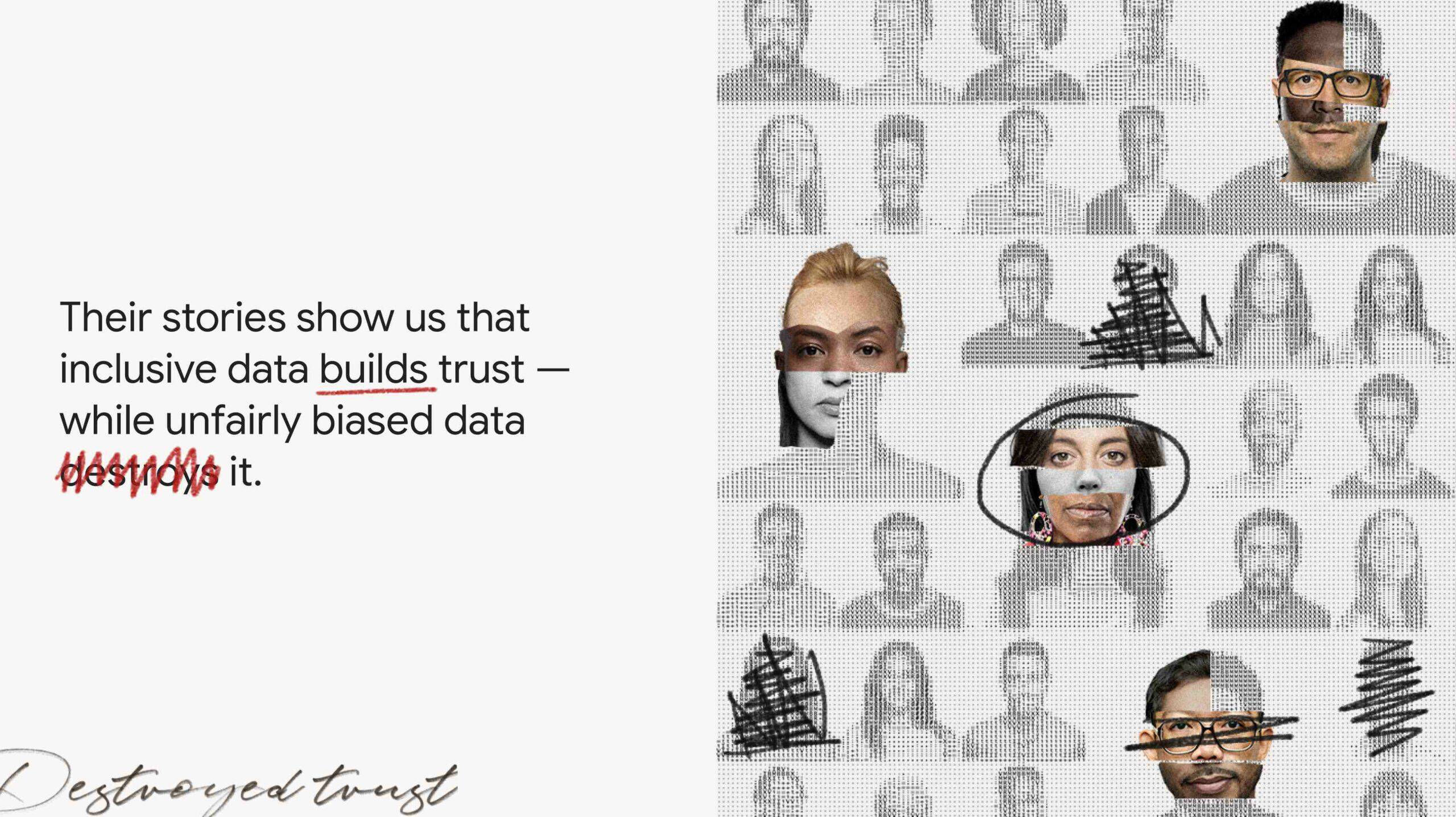

We created a compelling interactive story that used real people's experiences to humanize key findings from Google's study, showing that algorithms are only as good as the data they use. Using a code-generated illustration style, we rendered human portraits that show marketers how skewed data distorts the way they see their customers, literally.

The research came to life through a variety of content types, including audio from DE&I leaders.

ASCII portraits are dynamically generated as users scroll through the story.

the results

People were called to action.

Google gave it a spot on the front page of Think with Google, backed it with paid media (special treatment for a Think with Google article) to make sure marketers got the message, and shared the project widely throughout the company. We're really proud of what we created for this one, and Google was too.